From Simple Chatbots to Sophisticated LLMs: Tracing the Evolution of Language AI

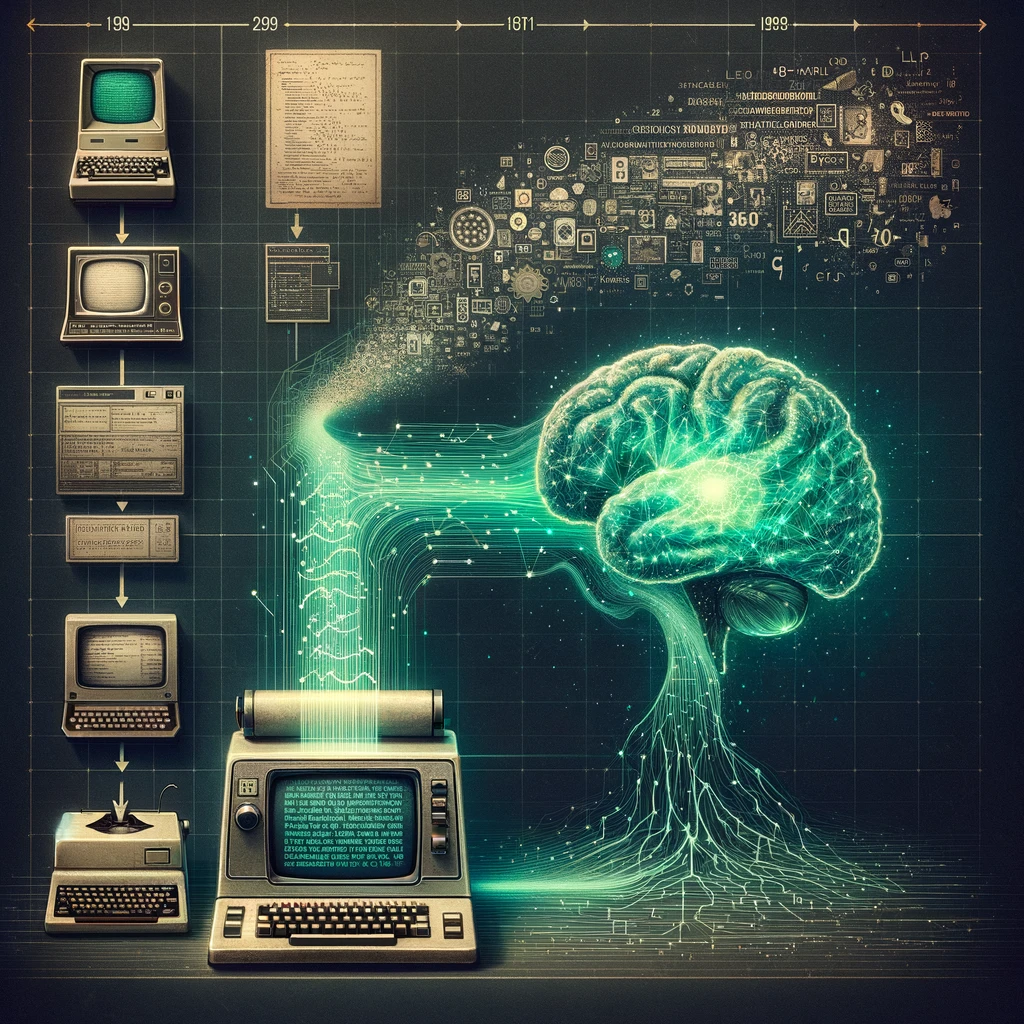

In the ever-evolving landscape of technology, language AI stands as a testament to human ingenuity, bridging the gap between computational logic and the nuanced realm of human communication. At its essence, language AI encompasses the suite of technologies that enable machines to understand, interpret, and generate human language, a cornerstone of artificial intelligence that has transformed how we interact with digital systems. From simple automated responses to complex, context-aware conversations, language AI has opened new frontiers in accessibility, efficiency, and personalization across various sectors, including customer service, content creation, and even education.

The journey of language AI from its inception has been nothing short of remarkable. It began with the earliest chatbots of the mid-20th century: rudimentary programs capable of mimicking human conversation through pre-defined rules and patterns. These initial forays laid the groundwork for what was to come, sparking curiosity and ambition among researchers and developers alike. Fast forward to today, and we find ourselves in the era of Large Language Models (LLMs) like GPT-3, which represent the pinnacle of language AI’s evolution. These advanced models, trained on vast swathes of text data, can generate coherent and contextually relevant text, engage in meaningful dialogues, and even produce creative literary works, showcasing a level of linguistic understanding and versatility that was once deemed the exclusive domain of humans.

As we delve into the evolution of language AI, from its humble beginnings to the sophisticated LLMs of the modern day, we embark on a fascinating exploration of technological progress, challenges overcome, and the limitless potential that lies ahead. This journey not only highlights the remarkable achievements in the field but also sets the stage for future innovations that will continue to reshape our interaction with the digital world.

The Dawn of Language AI

The inception of language AI traces back to a time when the concept of machines communicating like humans was more science fiction than reality. The early experiments with language AI set the stage for what would become a central pillar of artificial intelligence research and development. Among the first steps in this journey was the creation of rule-based chatbots, which, despite their simplicity, marked significant milestones in the quest to bridge human and machine communication.

Early Chatbots and Their Limitations

In the mid-1960s, the world was introduced to ELIZA, one of the first chatbots developed by computer scientist Joseph Weizenbaum at MIT. ELIZA was designed to mimic conversation by matching user inputs to pre-defined patterns and responding with scripted replies. Its most famous script, DOCTOR, simulated a Rogerian psychotherapist, essentially using mirroring techniques to encourage users to continue talking. Following ELIZA, PARRY, created by psychiatrist Kenneth Colby in the early 1970s, represented another leap forward. PARRY simulated a patient with paranoid schizophrenia, showcasing a more complex attempt at modeling human thought processes and responses.

While ELIZA and PARRY fascinated the public and researchers alike, they also highlighted the limitations of early language AI. Their reliance on rule-based systems meant that conversations were limited to scenarios their creators had anticipated. These chatbots lacked a true understanding of language; they couldn’t grasp context beyond their programmed patterns, leading to nonsensical or irrelevant responses when pushed beyond their limits. The scripted nature of these interactions underscored the chasm between human and machine communication, emphasizing the need for more sophisticated approaches to language understanding and generation.
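To make the pattern-matching idea concrete, here is a minimal sketch of a rule-based responder in the spirit of ELIZA. The rules, the replies, and the `respond` function are hypothetical illustrations and are not taken from Weizenbaum's original program. The sketch shows how each reply comes from a hand-written pattern, and how any input outside those patterns falls through to a generic fallback.

```python
import re

# Hypothetical rules in the spirit of ELIZA's DOCTOR script. The patterns and
# replies are illustrative only, not taken from the original program.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE), "Tell me more about your {0}."),
]

# Generic reply used when no rule matches. The program has no understanding
# of the input to fall back on, only this canned line.
FALLBACK = "Please go on."


def respond(user_input: str) -> str:
    """Return the scripted reply for the first rule whose pattern matches."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Mirror the captured fragment back to the user.
            return template.format(*match.groups())
    return FALLBACK


print(respond("I am feeling tired."))             # matches the "i am" rule
print(respond("What is the capital of France?"))  # no rule applies, so the fallback is used
```

The second call illustrates the limitation described above: a question the rule author did not anticipate gets a reply that has nothing to do with its content.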

The Turing Test

The theoretical groundwork for evaluating AI’s linguistic capabilities predates these early chatbots. In 1950, Alan Turing, a pioneering figure in computer science, proposed what is now known as the Turing Test (he called it the “imitation game”), a criterion for determining a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human. According to the test, if a human interlocutor could not reliably tell the machine apart from a human based on their responses to written questions, the machine could be considered to have achieved human-like intelligence.

The Turing Test set a benchmark for language AI development, pushing researchers to create systems that could understand and generate language in ways that felt genuinely human. It emphasized the importance of context, nuance, and adaptability in conversations—qualities that early chatbots like ELIZA and PARRY were far from achieving but that would become central goals in the evolution of language AI.

The dawn of language AI, with its rudimentary chatbots and theoretical challenges, laid the foundation for a field that would grow exponentially in complexity and capability. From these early beginnings, researchers and developers embarked on a quest to bridge the gap between human and machine communication—a journey that continues to unfold with each advancement in language AI technology.

The Rise of Statistical Models

As the field of language AI matured, the limitations of rule-based systems became increasingly apparent, paving the way for a paradigm shift towards statistical models. This transition, occurring in the late 20th century, marked a significant evolution in natural language processing (NLP), introducing a new approach that leveraged mathematical and probabilistic methods to decipher and generate human language. This shift was not merely a change in technique but a fundamental rethinking of how machines could understand and replicate the complexities of human communication.

Statistical NLP (Natural Language Processing)

Statistical NLP emerged as a response to the constraints of rule-based systems, which struggled to scale and adapt to the vast diversity of human language. Instead of relying on predetermined rules, statistical models utilized algorithms to analyze large datasets of text, learning patterns, associations, and the probabilities of word sequences. This data-driven approach enabled machines to make predictions about language based on the statistical properties learned from these datasets.

The essence of statistical NLP lies in its ability to handle ambiguity and variability in language use, attributes that are inherently human and difficult to codify into rules. By treating language as a statistical phenomenon, these models could generate more fluid and contextually appropriate responses, learn from new inputs, and improve over time. The move towards statistical methods represented a significant leap forward, offering a more flexible and scalable approach to language understanding and generation.
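As a rough illustration of learning "the probabilities of word sequences" from data, the sketch below estimates bigram probabilities by counting adjacent word pairs. The three-sentence corpus and the function names are assumptions made for this example. Real systems of the period used far larger corpora and smoothing techniques that are omitted here.

```python
from collections import Counter, defaultdict


def train_bigram_model(sentences):
    """Count how often each word follows each other word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        # <s> and </s> are conventional sentence-boundary markers.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def next_word_probability(counts, prev, nxt):
    """Estimate P(nxt | prev) as a relative frequency (no smoothing)."""
    followers = counts.get(prev)
    if not followers:
        return 0.0  # the model has never seen `prev`
    return followers[nxt] / sum(followers.values())


def most_likely_next(counts, prev):
    """Return the most frequent follower of `prev`, or None if unseen."""
    followers = counts.get(prev)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Tiny illustrative corpus (an assumption for this sketch).
corpus = ["the cat sat", "the cat ran", "the dog sat"]
model = train_bigram_model(corpus)

print(next_word_probability(model, "the", "cat"))  # "cat" follows "the" in 2 of 3 sentences
print(most_likely_next(model, "the"))
```

Nothing in this model is a hand-written rule: changing the corpus changes the predictions, which is the flexibility the statistical approach offered over rule-based systems.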

Machine Translation Milestones

One of the most compelling applications of statistical models in language AI is machine translation, which experienced notable advancements during this period. A pivotal development came in the 1990s with IBM’s introduction of Statistical Machine Translation (SMT). This approach broke away from the rule-based models that dominated machine translation efforts until then, using statistical algorithms to translate text from one language to another based on the analysis of bilingual text corpora.

IBM’s SMT and subsequent projects demonstrated that it was possible to achieve meaningful translation not by manually coding linguistic rules, but by letting the system learn these rules from examples. This method significantly improved the quality of machine translation, making it more accessible and effective for a variety of applications, from global communication to the localization of digital content.

The adoption of statistical models and their success in machine translation underscored the potential of data-driven approaches to overcome the challenges of language AI. These developments laid the groundwork for the next evolution in NLP, setting the stage for the emergence of neural networks and deep learning techniques that would further revolutionize the field. The rise of statistical models in language AI marked a turning point, showcasing the power of probability and data in unlocking the intricacies of human language for computational analysis and generation.

Breakthroughs with Neural Networks

The landscape of language AI underwent a monumental transformation as neural network-based models came to dominate the field in the 2010s. Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs), both of which predate that decade, moved into the mainstream of natural language processing (NLP), marking a significant leap in the capabilities of language AI. These neural networks brought about a deeper understanding and more nuanced generation of human language, pushing the boundaries of what machines could achieve in terms of linguistic tasks.

Introduction to Neural Networks

Neural networks are inspired by the structure and function of the human brain, consisting of layers of nodes or “neurons” that process information in a manner reminiscent of biological neural connections. This architecture allows neural networks to learn and make decisions in complex, non-linear ways, making them particularly well-suited for handling the intricacies of human language.

- Recurrent Neural Networks (RNNs): RNNs are a class of neural networks designed to recognize patterns in sequences of data, making them ideal for language tasks. Unlike traditional models, RNNs can use their internal state (memory) to process sequences of inputs, which is crucial for understanding the context and continuity in text. This feature made RNNs particularly revolutionary for tasks involving sentences or longer pieces of text.

- Convolutional Neural Networks (CNNs): Though originally developed for image processing, CNNs have also been applied to NLP tasks. By identifying patterns and structures in text data, CNNs contribute to feature extraction and classification tasks, enhancing the ability of models to interpret and generate language.
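The "internal state (memory)" of an RNN can be sketched as follows. This is a minimal forward pass of a simple (Elman-style) recurrent layer in plain Python. The names `W_xh`, `W_hh`, `b_h` and the weight values are assumptions chosen for illustration. A real model would learn its weights from data and would typically use a numerical library.

```python
import math


def rnn_step(x, h_prev, W_xh, W_hh, b_h):
    """One recurrent update: h_t = tanh(W_xh @ x_t + W_hh @ h_prev + b_h)."""
    h_new = []
    for i in range(len(b_h)):
        total = b_h[i]
        total += sum(W_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(W_hh[i][j] * h_prev[j] for j in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(inputs, W_xh, W_hh, b_h):
    """Process a sequence one item at a time, carrying the hidden state along."""
    h = [0.0] * len(b_h)  # the memory starts empty
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_xh, W_hh, b_h)
        states.append(h)
    return states


# Illustrative, untrained weights for 2 input features and 2 hidden units.
W_xh = [[0.5, -0.3], [0.1, 0.8]]
W_hh = [[0.2, 0.4], [-0.6, 0.1]]
b_h = [0.0, 0.0]

# The same vector appears at positions 0 and 2 of the sequence.
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
for t, state in enumerate(run_rnn(sequence, W_xh, W_hh, b_h)):
    print(t, state)
```

The first and third inputs are identical, yet their hidden states differ because the third step also depends on what came before it. That dependence on history is what lets an RNN take context into account.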

Sequence-to-Sequence Models

Building on the foundation laid by RNNs and CNNs, sequence-to-sequence (seq2seq) models represented another significant advancement in language AI. These models are designed to take a sequence of items (like words in a sentence) as input and produce another sequence as output, making them well suited to tasks such as translation, text summarization, and question answering.

The introduction of attention mechanisms further refined seq2seq models by allowing the model to focus on different parts of the input sequence when generating each word of the output sequence. This mimics the way humans pay attention to different words when understanding a sentence or generating a response. Attention mechanisms significantly improved the performance of seq2seq models, enabling them to handle longer sequences more effectively and produce outputs that are more coherent and contextually relevant.
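The idea of focusing on different parts of the input can be sketched for a single output step. The example below uses dot-product scoring, which is only one of several scoring functions found in attention-based seq2seq models and is chosen here for brevity. The vectors and the `attend` function are hypothetical, not taken from any particular system.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, encoder_states):
    """Dot-product attention for one decoder step.

    query: the decoder's current state (list of floats)
    encoder_states: one vector per input position
    Returns the attention weights and the weighted-average context vector.
    """
    scores = [sum(q * k for q, k in zip(query, state)) for state in encoder_states]
    weights = softmax(scores)
    dim = len(encoder_states[0])
    context = [
        sum(w * state[d] for w, state in zip(weights, encoder_states))
        for d in range(dim)
    ]
    return weights, context


# Three input positions with made-up 2-dimensional encodings.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
query = [2.0, 0.0]  # most similar to the first input position

weights, context = attend(query, encoder_states)
print(weights)  # the first position receives the largest weight
print(context)
```

Because the weights are recomputed for every output word, the decoder is not forced to squeeze the whole input into one fixed-size vector, which is why attention helps with longer sequences.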

The impact of these neural network-based models on language AI has been profound. They not only enhanced the accuracy and fluency of machine-generated text but also opened up new possibilities for AI applications in translation, content creation, and interactive communication. The breakthroughs with neural networks marked a pivotal moment in the evolution of language AI, showcasing the potential of deep learning techniques to capture the subtleties of human language and paving the way for even more sophisticated models like Transformer architectures.

The Era of Transformer Models

The advent of Transformer models in 2017 marked a significant milestone in the evolution of language AI, introducing a novel architecture that fundamentally changed the approach to natural language processing (NLP) and generation. Developed by researchers at Google, the Transformer model showcased an ability to process text in parallel rather than sequentially, significantly speeding up training times and enhancing the model’s understanding of context—a breakthrough that would set the stage for the most advanced language AI systems to date.

The Transformer Architecture

At the heart of the Transformer model is its unique architecture, primarily characterized by the self-attention mechanism. Unlike its predecessors, which processed input data in order, the Transformer can attend to different parts of the input sequence simultaneously. This ability to process words in relation to all other words in a sentence, in parallel, allows the model to capture the context and nuances of language more effectively and efficiently.

The Transformer architecture eschews the recurrent layers found in RNNs and instead relies on stacks of self-attention and feed-forward layers. This design not only facilitates faster training by eliminating the need for sequential data processing but also improves the model’s performance on a wide range of language tasks by enabling it to consider the full context of the input data.
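A stripped-down sketch of self-attention follows, assuming scaled dot-product scoring and, for simplicity, using the input vectors directly as queries, keys, and values. Real Transformer layers apply learned projections, multiple attention heads, and positional information, all of which are left out here.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """Scaled dot-product self-attention with Q = K = V = X.

    X holds one vector per position. Every position is compared with every
    other position, and each output row is a weighted mix of all rows of X.
    """
    d = len(X[0])
    scale = math.sqrt(d)
    outputs = []
    for q in X:
        # Each row depends only on X, never on another output row, so all
        # rows could be computed at the same time on parallel hardware.
        scores = [sum(a * b for a, b in zip(q, k)) / scale for k in X]
        weights = softmax(scores)
        outputs.append([sum(w * v[j] for w, v in zip(weights, X)) for j in range(d)])
    return outputs


# Made-up 2-dimensional vectors for a three-word input.
X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(X):
    print(row)
```

Unlike a recurrent layer, no output row here waits for the previous one. One consequence is that, without added positional information, reordering the inputs simply reorders the outputs.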

The Emergence of GPT and BERT

The Transformer model’s potential was quickly realized in two landmark language models: OpenAI’s Generative Pre-trained Transformer (GPT) series and Google’s BERT (Bidirectional Encoder Representations from Transformers). Both models leveraged the Transformer architecture to achieve groundbreaking results in language understanding and generation, but they approached the challenge from slightly different angles.

- GPT: The GPT series, starting with GPT-1 and reaching new heights with GPT-2 and GPT-3, focused on generative tasks. These models were pre-trained on vast datasets of text from the internet, learning to predict the next word in a sequence given all the previous words. The result was a family of models capable of generating coherent and contextually relevant text passages, answering questions, and even writing poetry or code. The scalability of the Transformer architecture allowed GPT-3, in particular, to operate with an unprecedented number of parameters (175 billion), further enhancing its generative capabilities.

- BERT: Google’s BERT model took a different approach: it focused on bidirectional context and was designed primarily to improve understanding rather than generation. BERT was trained to predict masked (hidden) words in a sentence, considering both the words that come before and after the target word. This bidirectional training allowed BERT to achieve a deep understanding of sentence structure and meaning, setting new standards for tasks like question answering, sentiment analysis, and language inference.
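The difference between the two training objectives can be sketched by looking at how training examples might be built from one sentence. This is a simplified illustration with hypothetical function names. It splits on whitespace and masks a single hand-picked position, whereas the real models work on subword tokens and BERT masks a random subset of them.

```python
MASK = "[MASK]"


def next_word_examples(tokens):
    """GPT-style objective: predict each word from the words to its left only."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_word_example(tokens, position):
    """BERT-style objective: hide one word and predict it from both sides."""
    masked = list(tokens)
    target = masked[position]
    masked[position] = MASK
    return masked, target


tokens = "the cat sat on the mat".split()

# Left-to-right: the context never includes words after the target.
for context, target in next_word_examples(tokens):
    print(context, "->", target)

# Bidirectional: the context includes words on both sides of the gap.
print(masked_word_example(tokens, 2))
```

In the first form the model only ever sees what precedes the target, which suits generating text one word at a time. In the second it sees the whole sentence around the gap, which suits tasks where the full input is available up front.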

The era of Transformer models has been characterized by rapid advancements and an expanding range of applications. From improving search engine results to powering conversational AI, Transformer models like GPT and BERT have not only advanced the state of the art in language AI but have also made sophisticated natural language understanding and generation more accessible and versatile. As we continue to explore the capabilities of these models, the potential for further innovation in language AI remains vast, promising even more sophisticated and intuitive human-computer interactions in the future.

Current Landscape and Future Directions

The landscape of language AI is currently dominated by state-of-the-art Large Language Models (LLMs) such as GPT-3 and its successors, which have pushed the boundaries of what artificial intelligence can achieve in understanding and generating human language. These advancements have opened up a plethora of applications, transforming how we interact with technology on a daily basis. However, as we venture further into this territory, we encounter both unprecedented opportunities and significant challenges.

State-of-the-Art LLMs

The capabilities of the latest LLMs, particularly models such as GPT-3, are remarkable. These models can generate creative content that rivals human output, from writing compelling articles and stories to composing poetry and music lyrics. In the realm of coding, LLMs assist developers by suggesting code snippets, debugging, and even writing entire programs based on natural language descriptions. Perhaps most impressively, these models are capable of conducting nuanced conversations, answering complex questions, and providing explanations that demonstrate a deep understanding of a wide range of topics.

Applications of these LLMs extend across various sectors, including customer service, where AI chatbots provide more accurate and context-aware responses; content creation, where they generate high-quality, original content at scale; and education, where personalized tutoring systems offer students tailored learning experiences.

Challenges and Ethical Considerations

Despite these advancements, the field of language AI faces ongoing challenges and ethical considerations. One of the primary concerns is the potential for AI to perpetuate or even amplify biases present in the training data, leading to unfair or harmful outcomes. There’s also the issue of misinformation, as the ability of LLMs to generate convincing text could be exploited to produce false or misleading content.

The impact of language AI on the future of work and human-AI interaction raises further ethical questions. As AI becomes more integrated into our lives, ensuring that these technologies augment human capabilities without displacing jobs or eroding personal interactions becomes crucial.

The Future of Language AI

Looking ahead, the future of language AI holds immense potential. We can anticipate more personalized AI assistants that understand individual preferences and contexts, making everyday tasks easier and more efficient. In education, advancements in AI-driven platforms could revolutionize learning by providing highly customized educational content and interactive tutoring systems, making quality education more accessible to students around the world.

Another promising direction is the role of AI in breaking language barriers globally. With continued improvements in translation accuracy and real-time interpretation, language AI could enable more seamless communication across different languages and cultures, fostering greater understanding and collaboration internationally.

As we navigate the current landscape and look to the future, the development of language AI will undoubtedly continue to transform our society in profound ways. Balancing the incredible opportunities these technologies offer with the need to address ethical and societal challenges will be key to ensuring that the evolution of language AI benefits all of humanity. Engaging in open dialogues, conducting responsible research, and implementing thoughtful policies will be essential as we shape the future of language AI together.

Evolution of Language AI: Conclusion

The journey of language AI from its humble beginnings with basic chatbots like ELIZA and PARRY to the sophisticated Large Language Models (LLMs) of today, such as GPT-3, is nothing short of astonishing. This evolution reflects not only the rapid advancements in technology but also the enduring human quest to create machines that can understand and mimic the complexities of human language. The progression from simple, rule-based programs to advanced models capable of generating creative content, coding, and engaging in nuanced conversations showcases the leaps we have made in bridging the gap between human and machine communication.

The potential of language AI to further transform communication, creativity, and information access is immense. As we stand on the cusp of new breakthroughs, it’s worth reflecting on how these technologies could redefine our interactions with the digital world, enhance our creative endeavors, and make knowledge more accessible across language barriers. The future of language AI promises not only more sophisticated and intuitive interactions with technology but also the opportunity to connect and understand each other better in our increasingly globalized world.

We invite you, our readers, to share your thoughts, experiences, and visions for the future of language AI. Whether you’ve interacted with chatbots, experimented with AI-generated content, or pondered the ethical implications of these technologies, your insights contribute to a richer understanding of language AI’s impact and potential. By fostering a community dialogue on this fascinating topic, we can navigate the challenges and opportunities ahead with collective wisdom and creativity.

The evolution of language AI is a testament to human ingenuity and a reminder of the endless possibilities that lie ahead. Together, let’s explore, innovate, and shape a future where language AI enriches our lives and expands the horizons of what’s possible.